GPU Instancer:Terminology

On this page, you can find information about the concepts and terminology used by GPU Instancer (GPUI). GPUI works out of the box with almost no required setup, but if you are not familiar with the concepts it relies on, reading these sections will help you find the right settings for your scenes and get the most performance out of GPUI.

GPU Instancing

GPU instancing is a batching technique that you can use to improve the performance of your games. It is a relatively new technique and is supported by most modern graphics devices.

Without any kind of batching, the meshes are sent to the GPU one by one and each is rendered with its corresponding material/shader. Thus, without batching, each mesh/material combination issues its own draw call.

GPU instancing works by sending the mesh/material data to the GPU once and drawing all of its instances in a single draw call. This reduces the number of batches (draw calls) to one, even if there are hundreds or thousands of instances of the same mesh/material combination. This removes the bottleneck that occurs when sending mesh and material data from the CPU to the GPU, and results in higher fps when there are many instances of the same mesh/material combination. That is, GPU instancing helps in scenarios where you have many instances of the same game object.

GPU Instancer builds further on this concept by applying various culling and LOD operations directly on the GPU and rendering only the relevant instances. This is why the frustum and occlusion culling systems included with GPU Instancer are designed to work with GPU instancing only. What they do is reduce the number of objects drawn for each prototype that is defined on the GPUI Managers. Say you have 1000 instances of a prefab: to draw these, GPU Instancer makes only 1 draw call with the count 1000 (this count is stored in GPU memory). When you use frustum and/or occlusion culling, this count is modified on the GPU according to the culling results. So it makes 1 draw call with a count less than 1000, and rendering speeds up further because fewer objects are actually rendered.
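The counting argument above can be illustrated with a small, self-contained sketch. This is plain Python rather than Unity code, and it is not how GPUI is implemented; all names (`InstancedDraw`, `draw_calls_without_batching`, `apply_culling`, the `tree_prefab` prototype) are hypothetical. It only models how many draw calls are issued and how culling lowers the instance count of a single instanced call.

```python
from dataclasses import dataclass


@dataclass
class InstancedDraw:
    """Models one instanced draw call for a single prototype (mesh/material pair)."""
    prototype: str
    instance_count: int  # in GPUI this count is kept in GPU memory


def draw_calls_without_batching(instances_per_prototype):
    """Without batching, every instance issues its own draw call."""
    return sum(instances_per_prototype.values())


def draw_calls_with_instancing(instances_per_prototype):
    """With instancing, each prototype is drawn by one call that carries a count."""
    return [InstancedDraw(name, count)
            for name, count in instances_per_prototype.items()]


def apply_culling(draw, visible_flags):
    """Culling keeps the single draw call but lowers its instance count."""
    return InstancedDraw(draw.prototype, sum(1 for v in visible_flags if v))


scene = {"tree_prefab": 1000}
unbatched = draw_calls_without_batching(scene)
instanced = draw_calls_with_instancing(scene)

# Hypothetical culling result: only every fourth instance is visible.
visibility = [i % 4 == 0 for i in range(1000)]
culled = apply_culling(instanced[0], visibility)
```

In this model the unbatched path issues one draw call per instance, the instanced path issues one call per prototype, and culling leaves the number of calls unchanged while shrinking the count that the call carries.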

The Unity Material Option

Limited instancing, maximum batch counts.

Instancing vs Indirect Instancing

DrawMeshInstanced vs DrawMeshInstancedIndirect

Compute Shaders

Frustum Culling

Without any culling, the graphics card would render everything in the scene, whether the rendered objects are actually visible or not. Since the more geometry the graphics card renders, the slower rendering becomes, various techniques are used to avoid rendering unnecessary geometry. "Culling" is an umbrella term for deciding, based on some rule, that certain objects will not be rendered.

Frustum culling is a culling technique that tests objects against the camera's frustum planes and culls the objects that do not fall inside the volume bounded by these planes. These objects are culled because they are not visible to the camera.
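As a rough illustration of the plane test, here is a minimal sketch in plain Python. It is not GPUI's compute shader code. It assumes a camera at the origin looking down +z with a 90 degree field of view on both axes, inward-facing planes stored as `(normal, d)`, and bounding spheres instead of bounding boxes; the names `make_frustum`, `signed_distance` and `is_visible` are hypothetical.

```python
import math


def make_frustum(near, far):
    """Six inward-facing planes (normal, d) for the assumed camera setup."""
    s = 1.0 / math.sqrt(2.0)
    return [
        ((0.0, 0.0, 1.0), -near),  # near:   z >= near
        ((0.0, 0.0, -1.0), far),   # far:    z <= far
        ((s, 0.0, s), 0.0),        # left:   x >= -z
        ((-s, 0.0, s), 0.0),       # right:  x <= z
        ((0.0, s, s), 0.0),        # bottom: y >= -z
        ((0.0, -s, s), 0.0),       # top:    y <= z
    ]


def signed_distance(plane, point):
    """Positive when the point is on the inner side of the plane."""
    (nx, ny, nz), d = plane
    return nx * point[0] + ny * point[1] + nz * point[2] + d


def is_visible(planes, center, radius):
    """A bounding sphere is culled if it lies entirely behind any one plane."""
    return all(signed_distance(p, center) >= -radius for p in planes)


frustum = make_frustum(near=0.1, far=100.0)
in_front = is_visible(frustum, (0.0, 0.0, 10.0), 1.0)     # inside the frustum
behind = is_visible(frustum, (0.0, 0.0, -10.0), 1.0)      # behind the camera
far_left = is_visible(frustum, (-50.0, 0.0, 10.0), 1.0)   # outside the left plane
straddling = is_visible(frustum, (-10.5, 0.0, 10.0), 1.0) # crosses the left plane
```

The test is conservative: an object that straddles a plane is kept, and a sphere near a frustum corner may be kept even though it is just outside, but an object that is inside the frustum is never culled.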

GPU Instancer does all the camera frustum testing and the required culling operations with compute shaders on the GPU before rendering the instances. Because of this, the culling operations are faster and take no CPU time, which leaves more room for game scripts on the CPU.

Please note that GPUI currently uses only the camera that is defined on a manager to do frustum culling. If the manager is auto-detecting the camera, this will be the first camera in the scene with the MainCamera tag. This means that frustum culling currently works only for this camera. If your scene requires multiple cameras rendering at the same time, you can turn off the frustum culling feature on the manager. If you are using multiple cameras but not rendering with them at the same time, you can simply call the SetCamera(camera) method from the GPUInstancerAPI after you switch your camera, and GPUI will start handling all its operations on the new camera.

Occlusion Culling

In most games, the camera is dynamic - it captures the game world from different angles. So, for example, in one camera position the grass behind a house can be visible, and in another one it may not. However, with only frustum culling, the grass will be rendered as long as it stays inside the camera frustum, even though it may actually be hidden behind the house. This happens because frustum culling tests objects only against the camera frustum and not against other geometry: the hidden grass is still sent to the GPU and drawn, and the closer house ends up covering the same pixels. This is referred to as overdraw.

Occlusion Culling is a technique that targets this concern. It lightens the load on the GPU by not rendering the objects that are hidden behind other geometry (and therefore are not actually visible).

As opposed to frustum culling, occlusion culling does not happen automatically in Unity. For an overview of Unity's default occlusion culling solution, you can take a look at this link:

https://docs.unity3d.com/Manual/OcclusionCulling.html

This solution has two major drawbacks: (a) that you need to prepare (bake) your scene's occlusion data before using it and (b) that you need your occluding geometry to be static to be able to do so. That effectively means that objects that move during playtime cannot be used for occlusion culling.

GPUI comes with a GPU-based occlusion culling solution that aims to remove these limitations while increasing performance. The algorithm used for this is known as Hierarchical Z-Buffer (Hi-Z) Occlusion Culling. In effect, GPUI allows the instances of its defined prototypes to be culled by any occluding geometry, without baking any occlusion maps or preparing your scene.

Just like its frustum culling solution, GPUI does the required testing and culling operations with compute shaders on the GPU before rendering the instances. Again, because of this, the culling operations are faster and take no CPU time, which leaves more room for game scripts on the CPU.

However, please note that the Hi-Z occlusion culling solution introduces additional operations in the compute shaders. Although GPUI is optimized to handle these operations efficiently, they still create unnecessary overhead in scenes where the game world is set up such that there is no gain from occlusion culling. A good example of this would be top-down cameras, where almost everything is always visible and there are no obvious occluding objects. For more information on this point, please check the occlusion culling section in the Best Practices page.

Please also note that, just as with frustum culling, GPUI currently uses only the camera that is defined on a manager to do occlusion culling. If the manager is auto-detecting the camera, this will be the first camera in the scene with the MainCamera tag. This means that occlusion culling currently works only for this camera. If your scene requires multiple cameras rendering at the same time, you can turn off the occlusion culling feature on the manager. If you are using multiple cameras but not rendering with them at the same time, you can simply call the SetCamera(camera) method from the GPUInstancerAPI after you switch your camera, and GPUI will start handling all its operations on the new camera.

How does Hi-Z Occlusion Culling work?

In occlusion culling (in general), the most important idea is to never cull visible objects. After this, the second-most important idea is to cull fast. GPUI's occlusion culling algorithm makes the camera generate a depth texture and uses this to make culling decisions in the compute shaders. Culling is decided based on the bounding boxes of the instances: GPUI compares the instance bounds with the depth texture with respect to both their sizes and their depths. If the object instance is completely occluded by a part of the texture that has a closer value, then it is culled.
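The comparison described above can be sketched on the CPU in a few lines of Python. This is only an illustration under stated assumptions, not GPUI's compute shader: depth values are assumed to lie in [0, 1] with larger values closer to the camera, the instance's bounding box is assumed to be already projected to an inclusive texel rectangle, and the function name `is_occluded` is hypothetical.

```python
def is_occluded(depth_texture, rect, instance_nearest_depth):
    """Return True only if every covered texel holds a closer depth than the instance.

    depth_texture: 2D list of floats in [0, 1], larger = closer (assumed convention)
    rect: (x_min, y_min, x_max, y_max), inclusive texel bounds of the instance's
          screen-space bounding box
    instance_nearest_depth: depth of the bounding box point nearest to the camera
    """
    x_min, y_min, x_max, y_max = rect
    farthest_occluder = min(
        depth_texture[y][x]
        for y in range(y_min, y_max + 1)
        for x in range(x_min, x_max + 1)
    )
    # Conservative: cull only when even the farthest covered texel is closer.
    return farthest_occluder > instance_nearest_depth


# 4x4 depth texture: a wall (0.8) covers the left half, empty background (0.0) elsewhere.
depth = [
    [0.8, 0.8, 0.0, 0.0],
    [0.8, 0.8, 0.0, 0.0],
    [0.8, 0.8, 0.0, 0.0],
    [0.8, 0.8, 0.0, 0.0],
]

hidden = is_occluded(depth, (0, 0, 1, 1), 0.5)    # fully behind the wall
partly = is_occluded(depth, (1, 1, 2, 2), 0.5)    # sticks out past the wall
in_front = is_occluded(depth, (0, 0, 1, 1), 0.9)  # closer than the wall
```

Taking the farthest depth over the covered region and comparing it with the nearest point of the instance keeps the first rule intact: an instance is culled only when it is hidden everywhere it could appear.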

GPUI implements this technique by adding a command buffer to the main camera and passing the camera's depth information to a compute shader. The compute shader then calculates visibility as described above and relays the visible instances to be rendered. Notice that this method does not involve a readback to the CPU, so all the culling operations can be executed on the GPU before rendering.

There are various advantages to this, the main ones being that it works out of the box without the need to bake occlusion maps, and that you can use culling with dynamic geometry that you don't even have to define as occluders. Furthermore, it is extremely fast, given that all the operations are executed on the GPU. However, the culling accuracy is ultimately limited by the precision of the depth buffer. On this point, GPUI analyzes the depth texture (by its mip levels) and decides how accurate it can be without compromising performance and without culling instances that are actually visible.
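To make the role of the mip levels more concrete, here is a minimal sketch of a hierarchical depth lookup in plain Python. It shows the general Hi-Z idea rather than GPUI's actual shader logic, and it rests on several assumptions: a square, power-of-two depth texture, depth values in [0, 1] with larger values closer to the camera, and a simple rule that picks the mip level from the longest side of the instance's screen rectangle. The names `build_hiz_mips`, `pick_mip_level` and `is_occluded_hiz` are hypothetical.

```python
import math


def build_hiz_mips(depth_texture):
    """Build a mip chain where each texel keeps the farthest (smallest) depth
    of the 2x2 block beneath it, so coarser levels never overstate occlusion."""
    mips = [depth_texture]
    while len(mips[-1]) > 1:
        prev = mips[-1]
        size = len(prev) // 2
        mips.append([
            [min(prev[2 * y][2 * x], prev[2 * y][2 * x + 1],
                 prev[2 * y + 1][2 * x], prev[2 * y + 1][2 * x + 1])
             for x in range(size)]
            for y in range(size)
        ])
    return mips


def pick_mip_level(rect, max_level):
    """Choose the level at which the rectangle spans at most 2 texels per axis."""
    x_min, y_min, x_max, y_max = rect
    longest_side = max(x_max - x_min + 1, y_max - y_min + 1)
    return min(max_level, math.ceil(math.log2(longest_side)))


def is_occluded_hiz(mips, rect, instance_nearest_depth):
    """Test the instance against a handful of texels of the chosen mip level."""
    level = pick_mip_level(rect, len(mips) - 1)
    mip = mips[level]
    x_min, y_min, x_max, y_max = (v >> level for v in rect)
    farthest_occluder = min(
        mip[y][x]
        for y in range(y_min, y_max + 1)
        for x in range(x_min, x_max + 1)
    )
    return farthest_occluder > instance_nearest_depth


# 4x4 depth texture: a wall (0.8) covers the left half, empty background (0.0) elsewhere.
depth = [
    [0.8, 0.8, 0.0, 0.0],
    [0.8, 0.8, 0.0, 0.0],
    [0.8, 0.8, 0.0, 0.0],
    [0.8, 0.8, 0.0, 0.0],
]
mips = build_hiz_mips(depth)

hidden = is_occluded_hiz(mips, (0, 0, 1, 1), 0.5)    # behind the wall
partly = is_occluded_hiz(mips, (1, 1, 2, 2), 0.5)    # sticks out past the wall
whole_view = is_occluded_hiz(mips, (0, 0, 3, 3), 0.5)  # covers the whole view
```

A coarser level needs fewer texture reads for large instances but may fail to cull an instance that a full-resolution test would have culled. Because each coarse texel stores the farthest depth of its block, the error always falls on the side of keeping the instance.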

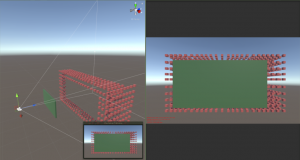

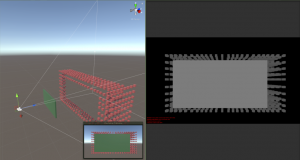

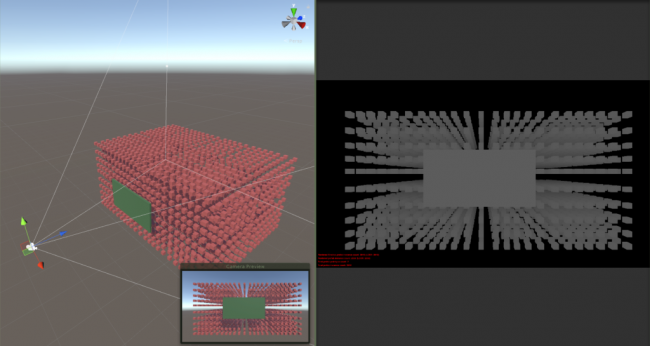

As you can see in the images above, the depth texture is a grayscale representation of the camera view where white is close to the camera and black is far away.

As the distance between the occluder and the instances becomes shorter relative to their distance from the camera, their depth representations come closer to the same color.

In short, given the precision of the depth buffer, GPUI makes the best choice it can to cull instances for better performance, without risking the culling of any visible objects.